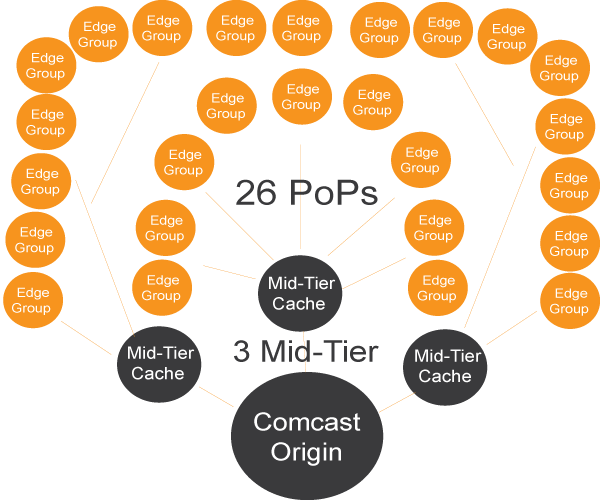

Back in April 2014, Comcast's Chief CDN Engineer, Jan van Doorn, presented “Comcast CDN Architecture”. Comcast is likely the only cable operator in the world to have built its own CDN platform from scratch with open source software. Apache Traffic Server is the caching engine that powers delivery, and a custom-built health protocol application and monitoring system were developed to round out the functionality. In April 2014, Comcast had 26 PoPs, 250 cache servers, and 3 mid-tier locations, and delivered 1.5PB to 2PB per day. For reporting, Comcast used Splunk instead of building its own system. Jan van Doorn planned to double capacity by the end of 2014. Here are the key points on the Comcast CDN.

Four Pillars of Comcast CDN

- Content Router: the goal is to direct customers to the best cache.

  - Routing decisions are based on 1) distance / network hops, 2) network cost, 3) network link quality, and 4) content availability

  - DNS content routing and IP address are used

- Health Protocol: Built in-house as an Apache Tomcat application; the system pulls health data every 8 seconds

- Management & Monitoring System: Built in-house in Perl / Mojolicious framework

- Reporting System: Based on Splunk, where the logs act as the billing system
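The content router's decision criteria can be illustrated with a minimal sketch. It is not Comcast's implementation: the `Cache` fields, the weights, and the choice to treat health and content availability as hard filters are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cache:
    """Hypothetical view of one edge cache as seen by the content router."""
    name: str
    hops: int             # distance / network hops to the client
    link_cost: float      # relative network cost (lower is cheaper)
    link_quality: float   # 0.0 (poor) to 1.0 (excellent)
    has_content: bool     # content availability
    healthy: bool = True  # status from the health protocol polling


def score(cache: Cache, w_hops: float = 1.0, w_cost: float = 1.0,
          w_quality: float = 5.0) -> float:
    """Lower is better. The weights are illustrative assumptions."""
    return (w_hops * cache.hops
            + w_cost * cache.link_cost
            + w_quality * (1.0 - cache.link_quality))


def pick_cache(caches: List[Cache]) -> Optional[Cache]:
    """Return the best healthy cache that can serve the content, if any."""
    candidates = [c for c in caches if c.healthy and c.has_content]
    if not candidates:
        return None
    return min(candidates, key=score)


caches = [
    Cache("edge-a", hops=2, link_cost=1.0, link_quality=0.90, has_content=True),
    Cache("edge-b", hops=1, link_cost=1.0, link_quality=0.95, has_content=True,
          healthy=False),
    Cache("edge-c", hops=5, link_cost=0.5, link_quality=0.99, has_content=True),
    Cache("edge-d", hops=1, link_cost=0.5, link_quality=1.00, has_content=False),
]
best = pick_cache(caches)
```

In this example `edge-b` and `edge-d` are excluded before scoring (one is unhealthy, the other cannot serve the content), and `edge-a` is chosen over `edge-c` because it is fewer hops away.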

Comcast CDN PoP Diagram

Key Takeaways

- Comcast is moving all legacy video to IP

- Based on Apache Traffic Server (ATS)

- Large video files are broken into 2 to 6 second chunks

- Average size of object (chunk) is 1MB

- 4Mbps to 6Mbps of bandwidth for video delivery to a laptop

- 8Mbps of bandwidth for video delivery to a large screen

- CDN supports Live TV and VoD

- No clustering used

- Criteria for building CDN: No vendor lock-in, open standards, horizontal scale, cost effective, IPv6 support from start

- 26 PoPs (Cache Groups / Edge Locations)

- 250 Cache Servers deployed

- 3 mid-tier cache locations act as forward proxies; application and network ACLs are used for security

- Edges are reverse proxies and mid-tiers are forward proxies

- Daily traffic average is 240Gbps and Peak 360Gbps

- 1.7Tbps of edge capacity

- April 2014 – 8% traffic was IPv6; goal is 30% by end of 2014
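A few of these figures can be cross-checked with simple arithmetic. The sketch below uses only numbers quoted above; the helper name `chunk_size_mb` is hypothetical, and dividing edge capacity evenly across all 250 servers is a simplifying assumption, since the talk summary does not say how many of those servers sit at the edge.

```python
def chunk_size_mb(bitrate_mbps: float, duration_s: float) -> float:
    """Size of one video chunk in megabytes: megabits per second x seconds / 8."""
    return bitrate_mbps * duration_s / 8


# Figures quoted in the takeaways above.
EDGE_CAPACITY_GBPS = 1700  # 1.7Tbps of edge capacity
CACHE_SERVERS = 250
PEAK_GBPS = 360

# A 2-second chunk at 4Mbps is 1MB, which matches the quoted average object size.
small_chunk = chunk_size_mb(4, 2)

# A 6-second chunk at 6Mbps is the upper end of the quoted ranges.
large_chunk = chunk_size_mb(6, 6)

# Assumption: capacity is spread evenly across all 250 cache servers.
per_server_gbps = EDGE_CAPACITY_GBPS / CACHE_SERVERS

# Share of edge capacity in use at the quoted peak.
peak_utilization = PEAK_GBPS / EDGE_CAPACITY_GBPS

print(f"chunk size range: {small_chunk:.1f}MB to {large_chunk:.1f}MB")
print(f"capacity per server: {per_server_gbps:.1f}Gbps")
print(f"peak utilization: {peak_utilization:.0%}")
```

Under these assumptions, peak traffic uses roughly a fifth of the stated edge capacity.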

Comparison Between Comcast and Fastly CDN Servers

| Comcast Server Specs | Fastly Server Specs |
| --- | --- |
| | |