Varnish has become one of the world’s leading reverse proxy servers, with high-profile sites such as Wikipedia, Facebook, Twitter and Vimeo all using it. In this article, we’ll break down the specifics that make Varnish unique, with a guide to its features and functionality to help you decide whether it best suits your business needs.

What sets Varnish apart from counterparts like Apache Traffic Server and Nginx is that it was written exclusively as an HTTP accelerator to speed up traffic to websites. Unlike the others, it cannot function as a web server, only as a reverse proxy, which brings some major advantages, especially for sites heavy in dynamic content. Besides serving content faster, Varnish can also be used as a web application firewall (WAF), DDoS defender, load balancer, integration point, single sign-on gateway, authentication and authorization policy mechanism, quick fix for unstable backends and HTTP router. But to understand and make better use of these features, we need to begin with the design.

Designed for Modern Hardware Features

One key distinction all users need to know is that Varnish was designed specifically for modern hardware, which means it needs a 64-bit architecture to perform at its best. To achieve its speed, Varnish uses a workspace-oriented memory model instead of allocating the exact amount of space it needs at run time. This leaves memory management to the operating system, which is better placed to decide what to keep in RAM and which threads are ready to run. Running Varnish on a 32-bit system will diminish the results, leaving you with less virtual memory space and fewer threads.

Varnish also uses its own Varnish Configuration Language (VCL), which gives practical freedom to the system administrator: the caching policy is written in VCL by the person running the system, instead of a developer trying to predict every caching scenario in advance. Varnish translates VCL into C and compiles it before running it, and inline C can be embedded in VCL where needed, which makes it easier to specify and modify behavior. Varnish also offers modules, known as VMODs, typically written in C together with a small interface definition (VCC) file, which let you extend the functionality with custom features.
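As a rough illustration of how a VMOD is pulled into VCL, the sketch below imports the std module that ships with Varnish and uses two of its functions. The backend address is the one used later in this article; the log message text is an arbitrary choice for illustration.

```vcl
vcl 4.0;

# The std VMOD is bundled with Varnish; other VMODs are imported the same way.
import std;

# Assumed backend, matching the example used elsewhere in this article.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_recv {
    # Write a line to the shared memory log for every request.
    std.log("Handling request for " + req.url);

    # Normalize the Host header so "Example.com" and "example.com"
    # do not end up as separate cache entries.
    if (req.http.host) {
        set req.http.host = std.tolower(req.http.host);
    }
}
```

Since vcl_recv does not return here, Varnish continues with its built-in vcl_recv logic after these lines run.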

Also unique to its design is how Varnish logs to a SHared Memory LOG (SHMLOG). This allows Varnish to log large amounts of information at little to no cost, leaving it to other applications on your OS to analyze the data and extract the useful pieces, which reduces lock contention in the heavily threaded architecture. Cached content, by contrast, is kept in the cache storage and looked up by hash key, which the administrator can control. Varnish has a key/value store at its core, and you can control the input to the hashing algorithm; multiple objects can even share the same hash key.
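Control over the hash input happens in the vcl_hash subroutine. The sketch below follows the shape of the built-in logic (URL plus Host header or server IP) and adds one extra component. The X-Device header is a hypothetical name standing in for whatever request attribute you want to key on; it is not something Varnish sets itself.

```vcl
vcl 4.0;

# Assumed backend, matching the example used elsewhere in this article.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_hash {
    # Same inputs as the built-in vcl_hash: the URL and the host.
    hash_data(req.url);
    if (req.http.host) {
        hash_data(req.http.host);
    } else {
        hash_data(server.ip);
    }

    # Hypothetical extra input: cache separate copies per device class.
    if (req.http.X-Device) {
        hash_data(req.http.X-Device);
    }

    # Returning here skips the built-in vcl_hash.
    return (lookup);
}
```

Every extra hash input multiplies the number of objects stored for a URL, so additions like this trade hit rate for variation.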

The Set-Up

Since Varnish acts only as a reverse proxy, administrators first need to set up a backend server to fetch the content from. This can be any service that understands HTTP, although most of the manuals favor Apache. Also integral to the setup is that your server runs the right OS. Because Varnish leans heavily on the operating system for files and memory, only certain systems are well suited, with most BSD, Linux and Unix operating systems favored.

Basic Configuration

The first step to getting Varnish on your server is to install Apache as the backend and declare it as the default backend in default.vcl, as seen below.

vcl 4.0;

backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

When configuring this, make sure Apache is listening on port 8080 so that Varnish can reach it as the backend. Varnish will not show an error message at startup if the backend is unreachable, so a mismatch can easily go unnoticed. After setting this up and restarting Apache, it’s time to install Varnish, first making sure apt-transport-https and curl are installed so that the Varnish online package repository can be added. Software for Varnish Cache Plus and all VMODs is also available in RedHat and Debian package repositories.

To assist with the process, below are some basic command-line options. All the options are listed on the varnishd(1) manual page, with details to help guide the installation. Thanks in part to its wide usage, Varnish also has several online tutorials that walk you through editing the configuration and getting it all set up correctly.

| Option | Description |
| --- | --- |
| -a <[hostname]:port> | Listening address and port for client requests |
| -f <filename> | VCL file |
| -p <parameter=value> | Set tunable parameters |
| -S <secretfile> | Shared secret file for authorizing access to the management interface |
| -T <[hostname]:port> | Listening address and port for the management interface |
| -s <storagetype[,options]> | Where and how to store objects |

The Varnish Architecture

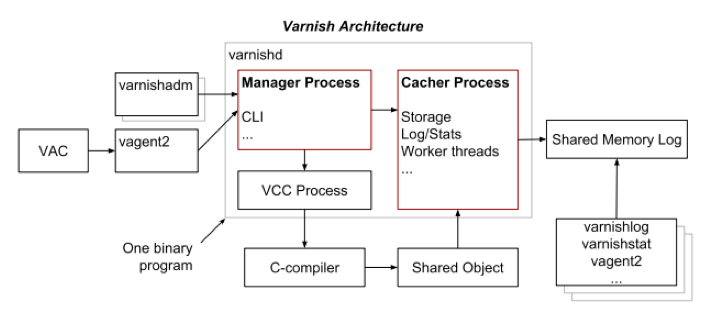

To help you run the software, Varnish breaks down its architecture using utility programs that perform and manage various features. In order to help understand the function of each program, below is a diagram mapping out the basic structure.

In the central block, where the processing takes place, is the Varnish daemon: varnishd. This program accepts HTTP requests from clients, sends the requests to a backend and caches the returned objects. The utility program varnishadm opens a command line interface (CLI) connection to varnishd, performing functions such as starting and stopping the cache, changing configuration parameters, reloading VCL, and more. The Varnish agent, vagent2, is an open source HTTP REST interface that allows varnishd to be monitored remotely. Its other features include uploading, downloading and persisting (storing to disk) VCL, banning, viewing parameters, showing panic messages, and starting and stopping varnishd.

The varnishlog utility gives you access to the log, which contains specific data about clients and their requests, while varnishstat is used to read the global counters. As stated before, the SHared Memory LOG (SHMLOG) holds all of this information. It works as a circular buffer, overwriting the oldest data as the log fills to capacity. While there might not be any historic data in the log, this design keeps an abundance of current information accessible at very high speed.

Inside the central block, two main processes are running. The first is the manager process, which delegates tasks to the child process and provides the CLI through which caching is started. The main job of the manager is to ensure that there is always a process for each task and that the cacher is always functioning. As a front line of defense, the manager checks on the cacher every few seconds and restarts it if it has crashed or stopped responding, so a fault in the cacher, whatever its cause, does not take the service down for long.

The other main process, referred to as the child process, is the more exposed of the two, since it interfaces with the clients. Its main functions are to listen for client requests, manage worker threads, store cached objects, log traffic and update the counters for statistics. To protect the system, there is no backwards communication from the child to the manager. Instead, if the child detects a serious fault, it immediately logs a panic message in the SHMLOG to alert the administrator.

Threading Model

The child process caches content by running multiple threads in two thread pools. When a connection is accepted, it is handed to a thread pool, which either delegates the connection to an available thread, queues the request if no thread is free, or drops the connection if the queue is at capacity. Each client request is served by a single worker thread, while a separate expiry thread removes old content from the cache. According to the Varnish documentation, two thread pools have proven sufficient for even the busiest of servers.

Varnish can also spawn new worker threads on demand to satisfy heavier traffic, and remove them again once the load has dropped. For efficiency, Varnish recommends keeping a few threads idle during normal traffic in order to handle the occasional spike. As long as you’re running on a 64-bit system, this is more efficient than constantly spawning and destroying threads as demand fluctuates.

Cache Invalidation

Varnish automatically invalidates expired objects, but when setting up the cache policy you can also proactively invalidate specific objects, using one of four options.

- HTTP Purge: invalidates cached objects explicitly by their hash. Removes one specific object along with all its variants. Frees memory immediately, with high scalability but low flexibility. Good if you know exactly what to remove. Leaves it to the next client to refresh the content.

- Banning: invalidates all objects in the cache that match a regular expression. Does not necessarily free up memory at once. Prohibits Varnish from serving the matching content from cache.

- Force cache misses: Varnish disregards any existing object and always fetches or re-fetches the content from the backend. May create multiple objects as a side effect. Does not scale, since memory usage actually increases. Useful for refreshing slowly generated content.

- Hashtwo: only available with Varnish Plus. Useful for websites that need cache invalidation at very large scale. Invalidates objects based on cache tags. Distributed as a VMOD only.
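The first three options can be sketched in VCL roughly as follows. This is a minimal example under several assumptions: the PURGE and BAN request methods are a common convention rather than something Varnish defines, the ACL name purgers and the X-Refresh header are hypothetical, and invalidation is restricted to localhost. Hashtwo is not shown because it depends on a Varnish Plus VMOD.

```vcl
vcl 4.0;

# Assumed backend, matching the example used elsewhere in this article.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# Hypothetical ACL: only these clients may invalidate content.
acl purgers {
    "127.0.0.1";
}

sub vcl_recv {
    # HTTP Purge: drop the object (and its variants) for this exact URL.
    if (req.method == "PURGE") {
        if (client.ip !~ purgers) {
            return (synth(405, "Not allowed"));
        }
        return (purge);
    }

    # Banning: the request URL is treated as a regular expression and
    # every cached object on this host that matches is invalidated.
    if (req.method == "BAN") {
        if (client.ip !~ purgers) {
            return (synth(405, "Not allowed"));
        }
        ban("req.http.host == " + req.http.host + " && req.url ~ " + req.url);
        return (synth(200, "Ban added"));
    }

    # Force cache miss: ignore any cached copy and fetch a fresh one.
    if (req.http.X-Refresh && client.ip ~ purgers) {
        set req.hash_always_miss = true;
    }
}
```

With a configuration like this loaded, a request such as PURGE /index.html from an allowed client removes that one page, while BAN ^/images/ invalidates everything under that path.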

Varnish also offers a way of rescuing requests, called grace mode. A graced object is an object that has expired but is still kept in the cache. Grace mode mitigates thread pile-ups by allowing Varnish to continue serving requests when the backend cannot. It is most valuable when no healthy backend is available, and it also avoids request pile-ups when a popular object has just expired: if a request is waiting for new content, Varnish delivers the graced object instead of queuing incoming requests.
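Grace is configured per object when the backend response arrives. The sketch below is a minimal example; the one-minute TTL and one-hour grace period are arbitrary values chosen for illustration, not recommendations.

```vcl
vcl 4.0;

# Assumed backend, matching the example used elsewhere in this article.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_backend_response {
    # Treat the object as fresh for one minute...
    set beresp.ttl = 1m;

    # ...and keep it for up to an hour after that, so it can still be
    # served while a new copy is being fetched or the backend is down.
    set beresp.grace = 1h;
}
```

A longer grace period keeps the site answering through longer backend outages, at the cost of potentially serving older content.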

Optimizing Varnish for Your Purposes

All this information regarding the architecture of Varnish is essential to integrating the software for your own uses. Varnish has several parameters that can be adjusted to better serve different workloads, such as the number of threads Varnish makes available, the outgoing ports and number of file descriptors allowed by the operating system, the storage size relative to the available RAM, and so on. These parameters can be changed at run time using varnishadm, or set at startup with the command varnishd -p param=value to override the default settings. If you change parameters at run time, make sure to also store the changes in your startup script so they aren’t lost when Varnish restarts.

One of the most important factors to consider when tuning Varnish to your own needs is understanding and writing effective VCL code. All caching in Varnish is performed based on rules written in VCL. The language looks like C and is compiled into C when Varnish loads it, but it has its own system variables, system functions and if-statements. VCL also has no user-defined variables, no functions that take arguments or return values (only simple named subroutines), and no looping structure, meaning each request proceeds through predefined but configurable subroutines.
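To give a feel for the language, here is a small sketch of a caching rule for static files. The subroutine name strip_static_cookies, the list of file extensions and the one-hour TTL are all illustrative assumptions; vcl_recv and vcl_backend_response are two of the predefined subroutines every request passes through.

```vcl
vcl 4.0;

# Assumed backend, matching the example used elsewhere in this article.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# A custom subroutine: no arguments, no return value, invoked with "call".
sub strip_static_cookies {
    if (req.url ~ "\.(css|js|png|jpg|gif|ico)$") {
        # Cookies normally prevent caching; static files do not need them.
        unset req.http.Cookie;
    }
}

# Runs when a client request is received.
sub vcl_recv {
    call strip_static_cookies;
}

# Runs when the backend has answered a cache miss.
sub vcl_backend_response {
    if (bereq.url ~ "\.(css|js|png|jpg|gif|ico)$") {
        unset beresp.http.Set-Cookie;
        set beresp.ttl = 1h;
    }
}
```

Because neither predefined subroutine returns explicitly, Varnish falls through to its built-in logic afterwards, which is usually the safest default when starting out.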

With a baseline understanding of the architecture, configuration and tuning capabilities of Varnish, you can set up this reverse proxy to best suit your own web server. And while each company will place different demands on its reverse proxy, hopefully this guide helps you better understand the functionality and features Varnish has to offer.