AI Chips: NPU vs. TPU

NPUs and TPUs

Neural processing units (NPUs) and tensor processing units (TPUs) are specialized hardware accelerators for machine learning and artificial intelligence (AI) workloads. Both are optimized for the mathematical operations that are common in machine learning, such as matrix multiplications and convolutions, and they can accelerate a wide range of tasks, including image classification, object detection, natural language processing, and speech recognition.

Both NPUs and TPUs are highly efficient and powerful resources for machine learning, but they do have some limitations, such as availability, compatibility, cost, and flexibility. In general, NPUs and TPUs are important tools that can be used to improve the performance and efficiency of machine learning applications.

- Both NPUs and TPUs are hardware accelerators optimized for the matrix multiplications and convolutions that dominate machine learning and AI workloads.

- There are some differences between NPUs and TPUs. One key difference is that TPU is Google's name for its own family of accelerators, which are specifically designed for deep learning, while NPU is a generic term for accelerators that any company or organization can develop and that may target a broader range of machine learning workloads. Cloud TPUs are available only through the Google Cloud Platform, although Google also sells Edge TPU hardware for on-device inference under its Coral brand.

- In terms of performance, both NPUs and TPUs are highly efficient and powerful resources for machine learning. However, TPUs may have a slight performance advantage due to their specific optimization for deep learning tasks. It is also worth noting that the specific performance of an NPU or TPU will depend on its design and implementation.

- Overall, both NPUs and TPUs are valuable resources for machine learning and AI, and they can be used to improve the performance and efficiency of machine learning applications. The choice between an NPU and a TPU will depend on the specific needs and goals of the application and the available resources.

What is a Neural Processing Unit (NPU)?

An NPU, or Neural Processing Unit, is a type of specialized hardware accelerator that is designed to perform the mathematical operations required for machine learning tasks, particularly those involving neural networks. NPUs speed up the inference phase of deep learning models and, in some designs, the training phase as well, allowing models to run more efficiently on many devices.

NPUs are similar to other hardware accelerators, such as GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), but they are specifically optimized for tasks related to artificial neural networks. They typically work alongside a central processing unit (CPU) to provide additional processing power for machine learning tasks.

NPUs can be found in a variety of devices, including smartphones, tablets, laptops, and other types of computing devices. They are often used to improve the performance of machine learning applications such as image and speech recognition, natural language processing, and other types of artificial intelligence workloads.

NPUs are optimized for the mathematical operations commonly used in machine learning, such as matrix multiplications and convolutions. These operations appear in many machine learning algorithms, including deep learning algorithms, which use neural networks made up of multiple layers of interconnected nodes.

Matrix multiplications and convolutions are used to process and analyze large datasets, and they are computationally intensive operations that require a lot of processing power. NPUs are designed to efficiently execute these operations, making them well-suited for machine learning tasks that involve large amounts of data.

In addition to matrix multiplications and convolutions, NPUs can also support other types of mathematical operations, such as element-wise operations and activation functions. Element-wise operations involve applying a mathematical operation to each element in an array or matrix, and activation functions are used to introduce nonlinearity in neural networks.
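As a rough illustration of how these operations fit together, the sketch below builds a single fully connected layer from a matrix multiplication, an element-wise bias addition, and a ReLU activation. It is plain Python rather than anything an NPU would actually run, and the function names (`matmul`, `add_bias`, `relu`, `dense_layer`) and the sample values are made up for this example.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    inner = len(b)
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    cols = len(b[0])
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]


def add_bias(m, bias):
    """Element-wise operation: add bias[j] to every entry in column j."""
    return [[x + bias[j] for j, x in enumerate(row)] for row in m]


def relu(m):
    """Activation function: replace every negative entry with zero."""
    return [[max(0.0, x) for x in row] for row in m]


def dense_layer(inputs, weights, bias):
    """One layer: matrix multiplication, then bias, then nonlinearity."""
    return relu(add_bias(matmul(inputs, weights), bias))


# Hypothetical data: 2 samples with 2 features each, mapped to 3 output units.
inputs = [[1.0, 2.0], [-1.0, 0.5]]
weights = [[1.0, 0.0, -1.0], [2.0, 1.0, 0.5]]
bias = [0.5, -1.0, 0.0]

outputs = dense_layer(inputs, weights, bias)
print(outputs)  # [[5.5, 1.0, 0.0], [0.5, 0.0, 1.25]]
```

The ReLU step is what introduces the nonlinearity: the entry that would have been -0.5 after the bias addition is clamped to 0.0.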

Depending on the design, some NPUs can also support other types of machine learning algorithms, such as support vector machines (SVMs) and decision trees, which involve different types of mathematical operations. One example of an NPU is the Intel Nervana processor.

Features

- High performance: NPUs are designed to be highly efficient and performant, allowing them to speed up the training and inference phases of deep learning models.

- Specialized design: NPUs are specifically optimized for tasks related to artificial neural networks, such as image and speech recognition, natural language processing, and other machine learning workloads.

- Power efficiency: NPUs are designed to be power efficient, allowing them to run for long periods without consuming much power.

- Hardware acceleration: NPUs can accelerate the performance of machine learning tasks, providing a significant boost in performance compared to using a CPU alone.

- Flexibility: NPUs can be used in a variety of devices, including smartphones, tablets, laptops, and other types of computing devices, making them versatile hardware accelerators.

Limitations

While NPUs have many benefits and can significantly improve the performance of machine learning tasks, they do have some limitations:

- Limited availability: NPUs are not as widely available as other hardware accelerators, such as GPUs (Graphics Processing Units). This means that not all devices may have an NPU available for use.

- Compatibility: NPUs may not be compatible with all machine learning software and frameworks. For example, some NPUs may only be compatible with certain types of neural network architectures or may require the use of specific software libraries.

- Cost: NPUs can be expensive to produce, which may make them cost-prohibitive for some users.

- Complexity: NPUs can be complex to design and implement, requiring specialized knowledge and expertise.

- Limited scalability: NPUs may not be able to scale as easily as other types of hardware accelerators, such as GPUs, which can be used in distributed computing environments. This may limit their ability to handle very large and complex machine-learning tasks.

What is a Tensor Processing Unit (TPU)?

A tensor processing unit (TPU) is a specialized hardware accelerator for machine learning and artificial intelligence (AI) workloads. It is specifically optimized to perform the mathematical operations used in deep learning algorithms, which rely on neural networks made up of multiple layers of interconnected nodes.

TPUs are designed to be highly efficient at executing matrix multiplications and convolutions, two of the most computationally intensive operations in deep learning. They are also able to support other types of mathematical operations, such as element-wise operations and activation functions, and they can be used to accelerate a wide range of machine learning tasks, including image classification, object detection, natural language processing, and speech recognition.

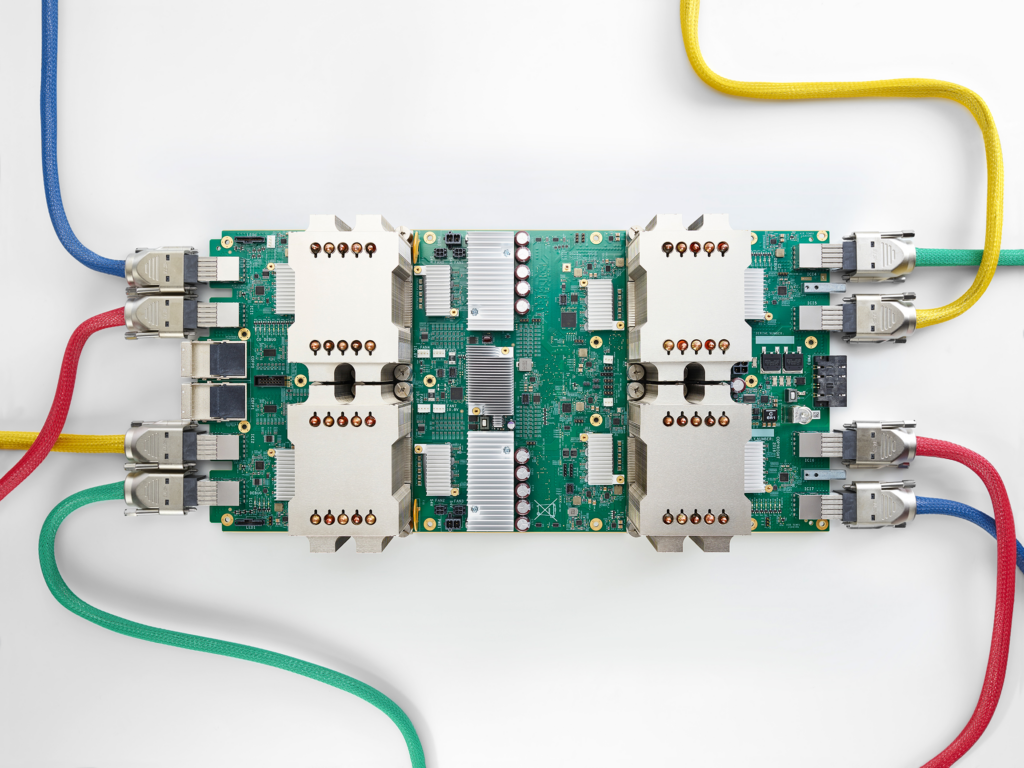

TPUs are developed by Google to accelerate the training and inference of deep learning models on the Google Cloud Platform. They are an important part of Google’s infrastructure for machine learning and AI, and they have been used to train some of the largest and most accurate deep learning models. Here is a Google TPU.

Features

Some of the key features of TPUs include:

- High performance: TPUs are optimized for the mathematical operations commonly used in deep learning algorithms, such as matrix multiplications and convolutions. They can perform these operations quickly, making them an efficient resource for training and running machine learning models.

- Energy efficiency: TPUs are designed to be energy efficient, which makes them well-suited for large-scale machine learning tasks that require a lot of computing power.

- Scalability: TPUs can be used individually, or they can be connected to form a TPU pod, which is a group of TPUs that can be used to scale up machine learning tasks. This allows users to scale their machine learning workloads up or down as needed.

- Programmability: TPUs can be programmed using TensorFlow, an open-source machine learning framework developed by Google, as well as JAX and PyTorch (through PyTorch/XLA). This makes it easy for developers to build and deploy machine learning models on TPUs.

- Custom architecture: TPUs have a custom architecture optimized for machine learning workloads. They have a high memory bandwidth and are designed to support a large number of concurrent operations, which makes them well-suited for running machine learning models.

Limitations

While TPUs are very powerful and efficient at executing the mathematical operations that are commonly used in deep learning algorithms, they do have some limitations. Some of the main limitations of TPUs include:

- Availability: TPUs are developed by Google, and Cloud TPUs are currently only available on the Google Cloud Platform. Apart from the Edge TPU hardware that Google sells for on-device inference, TPUs are not available to users who do not have access to the Google Cloud Platform.

- Compatibility: TPUs are programmed through the XLA compiler, which is targeted by TensorFlow, JAX, and PyTorch (via PyTorch/XLA). Machine learning frameworks, libraries, or custom operations without XLA support may not run on TPUs.

- Cost: TPUs are specialized and powerful pieces of hardware, which can be more expensive than other types of hardware.

- Flexibility: TPUs are optimized for deep learning tasks and are not as flexible as other types of hardware that are more general-purpose. This means they may not be well-suited for tasks that do not involve deep learning.

- Limitations on the types of models: TPUs are optimized for deep learning models, and they may not be as effective at running other types of machine learning models.

What is Matrix Multiplication?

In mathematics, matrix multiplication is a binary operation that takes two matrices as inputs and produces another matrix as output. It is defined as follows: given two matrices A and B, the matrix product C is a matrix such that its entry in row i and column j is the dot product of row i of matrix A and column j of matrix B.

For example, suppose that A is a 3×2 matrix and B is a 2×3 matrix. The matrix product C would be a 3×3 matrix, and its entries would be computed as follows:

C[i][j] = sum(A[i][k] * B[k][j]) for all k in the range 0 to 1

In this example, each entry of C is the dot product of row i of matrix A and column j of matrix B: the products A[i][k] * B[k][j] are computed for k = 0 and k = 1, and then summed to produce the entry in row i and column j of matrix C.
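The 3×2 by 2×3 case can be worked through in a few lines of plain Python. This is a minimal sketch of the textbook definition above, not how an accelerator implements it. The function name `matmul` and the sample matrices are chosen only for illustration.

```python
def matmul(a, b):
    """Return the matrix product of a (m x k) and b (k x n) as a list of rows."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "columns of a must equal rows of b"
    c = [[0] * n for _ in range(m)]
    for i in range(m):          # row of a
        for j in range(n):      # column of b
            for p in range(k):  # shared inner index
                c[i][j] += a[i][p] * b[p][j]
    return c


A = [[1, 2],
     [3, 4],
     [5, 6]]        # 3 x 2
B = [[7, 8, 9],
     [10, 11, 12]]  # 2 x 3

C = matmul(A, B)    # 3 x 3
for row in C:
    print(row)
# [27, 30, 33]
# [61, 68, 75]
# [95, 106, 117]
```

For instance, the top-left entry is 1·7 + 2·10 = 27, the dot product of the first row of A with the first column of B.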

Matrix multiplications are a fundamental operation in linear algebra and are widely used in many fields, including machine learning, computer graphics, and scientific computing. They are computationally intensive operations that require a lot of processing power, and they are often accelerated using specialized hardware such as NPUs.
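To give a rough sense of why matrix multiplication is considered computationally intensive, the sketch below counts the scalar operations in the straightforward algorithm. The function name `matmul_op_counts` is hypothetical, and the count assumes the textbook method with no algorithmic or hardware shortcuts.

```python
def matmul_op_counts(m, k, n):
    """Count scalar operations for an (m x k) times (k x n) product.

    Each of the m * n output entries is a dot product of length k,
    which needs k multiplications and k - 1 additions.
    """
    multiplications = m * n * k
    additions = m * n * (k - 1)
    return multiplications, additions


small = matmul_op_counts(3, 2, 3)           # the 3x2 by 2x3 example
large = matmul_op_counts(1024, 1024, 1024)  # two 1024 x 1024 matrices

print(small)  # (18, 9)
print(large)  # (1073741824, 1072693248)
```

The work grows with the product of all three dimensions, so multiplying two 1024×1024 matrices already takes more than a billion multiplications. Accelerators handle this by performing many of these multiply-and-add steps in parallel.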

What is Convolution?

In mathematics, convolution is an operation that combines two functions to produce a third function. It is defined as the integral of the product of the two functions after one is reversed and shifted.
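For finite sequences, the integral becomes a sum. The sketch below assumes that discrete setting, which the text above does not spell out, and computes out[n] as the sum over m of f[m] * g[n - m], so g is reversed and shifted by n. The function name `convolve_1d` and the sample sequences are chosen for illustration.

```python
def convolve_1d(f, g):
    """Full discrete convolution of two finite sequences."""
    out = []
    for n in range(len(f) + len(g) - 1):
        total = 0
        for m in range(len(f)):
            # g is indexed by n - m: reversed (the -m) and shifted (the n).
            if 0 <= n - m < len(g):
                total += f[m] * g[n - m]
        out.append(total)
    return out


f = [1, 2, 3]
g = [0, 1, 0.5]

result = convolve_1d(f, g)
print(result)  # [0, 1, 2.5, 4.0, 1.5]
```

Swapping the two arguments gives the same result, since convolution is commutative.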

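In machine learning, convolution is an operation that is used to extract features from data. It involves applying a small matrix called a “kernel” or “filter” to the data and computing the dot product of the kernel with a small region of the data. This process is repeated at every position as the kernel slides across the data, and the resulting dot products form a new set of features, often called a feature map. Strictly speaking, most deep learning libraries skip the kernel reversal from the mathematical definition and compute a cross-correlation, but the operation is still called convolution.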
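The sliding-kernel procedure can be sketched in plain Python as follows. The example assumes a stride of 1 and no padding, and it does not flip the kernel, which is the usual convention in deep learning libraries (strictly a cross-correlation). The function name `conv2d_valid`, the toy image, and the kernel are made up for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image and take a dot product at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Dot product of the kernel with the kh x kw region at (r, c).
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out


# Toy 4 x 4 image: bright on the left, dark on the right.
image = [[1, 1, 0, 0],
         [1, 1, 0, 0],
         [1, 1, 0, 0],
         [1, 1, 0, 0]]

# 2 x 2 kernel that responds to a bright-to-dark step from left to right.
kernel = [[1, -1],
          [1, -1]]

features = conv2d_valid(image, kernel)
for row in features:
    print(row)
# [0, 2, 0]
# [0, 2, 0]
# [0, 2, 0]
```

The output is nonzero only in the middle column, where the kernel straddles the boundary between the bright and dark halves. This is the sense in which a kernel detects a feature.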

Convolution is often used in image processing and computer vision tasks to detect patterns and features in images. For example, a convolutional neural network (CNN) is a neural network designed to process data using convolutions. CNNs are commonly used for tasks such as image classification, object detection, and segmentation. They are made up of multiple layers of interconnected nodes, and each layer applies a set of convolutions to the data to extract features.

Convolution is a computationally intensive operation that requires a lot of processing power, and it is often accelerated using specialized hardware such as NPUs.

Vendor Ecosystem

Several vendors provide neural processing units (NPUs), including:

- Intel

- Qualcomm

- Huawei

- Samsung

- MediaTek

Tensor processing units (TPUs) are developed by Google. Cloud TPUs are only available on the Google Cloud Platform, while Edge TPU hardware for on-device inference is sold under Google's Coral brand.

It is worth noting that the specific NPU or TPU offerings from these vendors may vary in terms of features, performance, and compatibility. It is advisable to research the specific offerings from each vendor to determine which one is the best fit for your needs and goals.

Summary

In conclusion, neural processing units (NPUs) and tensor processing units (TPUs) are specialized hardware accelerators that are designed to accelerate machine learning and artificial intelligence (AI) workloads. They are optimized for the mathematical operations that are commonly used in machine learning, such as matrix multiplications and convolutions, and they can be used to accelerate a wide range of machine learning tasks. Both NPUs and TPUs are highly efficient and powerful resources for machine learning, but they do have some limitations.

As machine learning and AI continue to evolve, it is likely that NPUs and TPUs will also evolve to become even more powerful and efficient. There is ongoing research and development in hardware acceleration for machine learning, and new technologies and approaches will likely be developed in the future. It is also possible that NPUs and TPUs will become more widely available and affordable, making them accessible to a wider range of users. Overall, NPUs and TPUs are important tools that will continue to play a significant role in the advancement of machine learning and AI.